Learning to Transfer with von Neumann Conditional Divergence

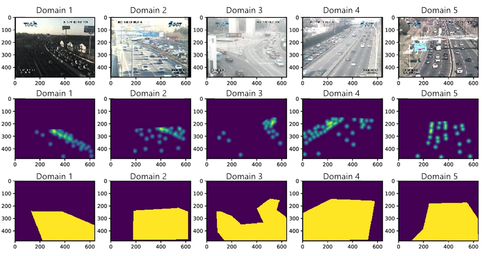

Learning paradigms designed for multiple domains or tasks, such as multitask learning, continual learning, and domain adaptation, aim to reduce the large amount of energy and manual labor needed to retrain machine learning models. In this work, we introduce a domain adaptation approach that exploits features learned from relevant source tasks to reduce the data required to learn a new target task.